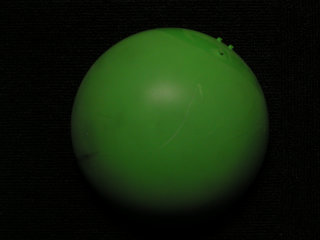

| Input image | Specular-free image |

The specular-free image is a concept first proposed by Tan et al. [1]. Tan used the "maximum chromaticity"-"intensity" space for the calculation. I, Miyazaki, proposed another method to generate a specular-free image, with the assistance of Tan [2]. My method uses a color space that I call M space. On this web page, I introduce my method.

[1] Go to Robby T. Tan's web page.

[2] Go to Daisuke Miyazaki's Specular-free-image web page.

First of all, I will show you what a specular-free image looks like. The left image is the input image, and the right image is the specular-free image calculated from it.

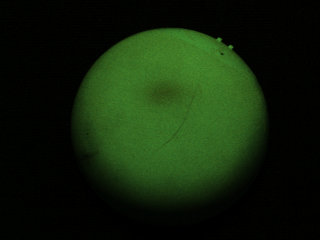

| Input image | Specular-free image |

The characteristics of the specular-free image are enumerated below.

"Specular-free image" is perhaps not a very good name. A specular-free image really has no specular component, but specular-free image ≠ diffuse image. "Pseudo-diffuse image" is perhaps a more proper name.

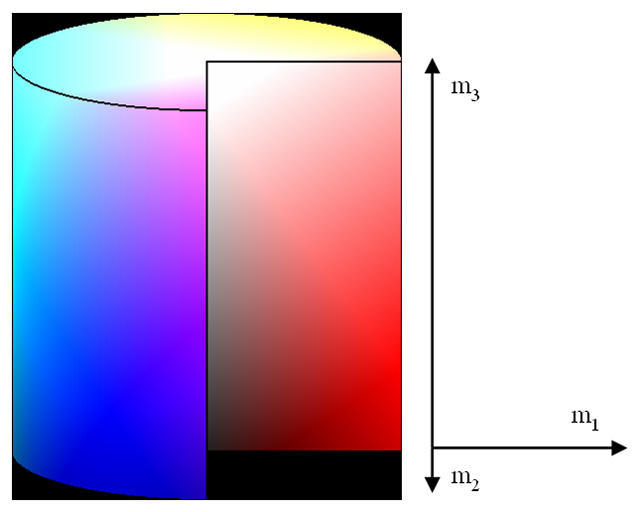

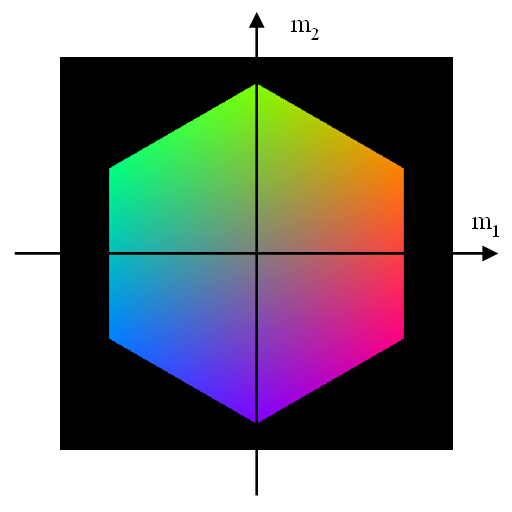

Here, I will show Miyazaki's method. First, transform the pixel value from RGB space to M space.

where (r, g, b) represents the intensity of the RGB image. The calculation is done per pixel. (m1, m2, m3) above is the intensity in the M image. An easy calculation indeed!
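The equation itself is not reproduced in this text, so the following is only a minimal sketch of the per-pixel transform. The matrix `RGB_TO_M` is an assumption, not taken from this page: it is chosen so that a white (r = g = b) pixel maps to m1 = m2 = 0, which the specular-removal argument later on this page requires. The function name `rgb_to_m` is hypothetical.

```python
import numpy as np

# ASSUMPTION: this matrix is not given in the text. Its first two rows sum
# to zero, so any gray/white pixel (r = g = b) lands on m1 = m2 = 0.
RGB_TO_M = np.array([
    [1.0, -0.5, -0.5],
    [0.0, np.sqrt(3.0) / 2.0, -np.sqrt(3.0) / 2.0],
    [1.0 / 3.0, 1.0 / 3.0, 1.0 / 3.0],
])


def rgb_to_m(rgb):
    """Convert an (..., 3) array of RGB values to (m1, m2, m3), per pixel."""
    rgb = np.asarray(rgb, dtype=np.float64)  # floating point, not integer
    return rgb @ RGB_TO_M.T
```

The same function works for a single pixel or for a whole H x W x 3 image, because the matrix product acts on the last axis only.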

Hue, saturation, and intensity are defined from the M space (m1, m2, m3) by the above equations. They are just conceptual values, and are not exactly equal to the hue, saturation, and intensity defined in other color spaces. They can be defined as above if you want to define them, that's all. In any case, hue, saturation, and intensity are not physical values but psychological values. If you are not interested in them, please ignore the above equations. These values are not used to calculate the specular-free image.

The specular-free image is calculated by the above equation. Here, the right-hand side of the equation is the input image, and the left-hand side is the specular-free image. "a" is an arbitrary value; set "a=1", for example. When "a" is large, the image will saturate. When "a" is small, the image will be darker.
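A minimal sketch of this step in M space follows. The formula is an assumption, since the equation image is missing here: m1 and m2 are kept, and m3 is replaced by "a" times the length of (m1, m2). The function name `specular_free_m` is hypothetical.

```python
import numpy as np


def specular_free_m(m, a=1.0):
    """Replace m3 by a * sqrt(m1^2 + m2^2); m1 and m2 are kept unchanged.

    ASSUMPTION: this formula is not shown in the text and is only a sketch.
    `m` is an (..., 3) array of (m1, m2, m3) values.
    """
    m = np.asarray(m, dtype=np.float64)
    m1, m2 = m[..., 0], m[..., 1]
    m3_sf = a * np.hypot(m1, m2)  # the original m3 is discarded entirely
    return np.stack([m1, m2, m3_sf], axis=-1)
```

Because the original m3 is never read, two pixels that differ only in m3 give the same output.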

It is easy to transform back from M space to RGB space. Just calculate the inverse matrix of the first equation. The result is shown above.
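As a sketch, the inverse can be obtained numerically instead of by hand. The forward matrix `RGB_TO_M` below is the same assumed matrix as before (it is not given in this text), and `m_to_rgb` is a hypothetical name.

```python
import numpy as np

# ASSUMPTION: forward matrix not given in the text; see the caveat above.
RGB_TO_M = np.array([
    [1.0, -0.5, -0.5],
    [0.0, np.sqrt(3.0) / 2.0, -np.sqrt(3.0) / 2.0],
    [1.0 / 3.0, 1.0 / 3.0, 1.0 / 3.0],
])

# Inverse matrix, computed once and reused for every pixel.
M_TO_RGB = np.linalg.inv(RGB_TO_M)


def m_to_rgb(m):
    """Convert an (..., 3) array of (m1, m2, m3) values back to RGB."""
    return np.asarray(m, dtype=np.float64) @ M_TO_RGB.T
```

Under this assumed matrix the inverse works out to r = (2/3) m1 + m3, g = -(1/3) m1 + m2/√3 + m3, b = -(1/3) m1 - m2/√3 + m3.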

Note that you should calculate with a floating-point type, not an integer type. (For a 256-level image,) normalize the image so that the maximum value of R, G, or B equals 255, then convert to integer values and output the RGB image. The specular-free image corrupts the albedo anyway, so normalization is not a bad choice.
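The normalization described above can be sketched as follows. The function name `to_uint8` is hypothetical, and clipping negative values to zero before scaling is an added assumption that the text does not mention.

```python
import numpy as np


def to_uint8(rgb):
    """Scale a float RGB image so its largest channel value becomes 255."""
    rgb = np.asarray(rgb, dtype=np.float64)
    rgb = np.clip(rgb, 0.0, None)  # ASSUMPTION: negative values are clipped
    peak = rgb.max()
    if peak <= 0.0:
        # An all-black image has nothing to normalize.
        return np.zeros(rgb.shape, dtype=np.uint8)
    return np.round(rgb * (255.0 / peak)).astype(np.uint8)
```

All arithmetic stays in floating point until the final conversion, as the note above recommends.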

There is no meaning to the letter M. Well, the initial letter of Miyazaki is M. In the field of color analysis, the letters R G B C I D S H S V Y U V Q L are often used, and M is not so frequently used. Unfortunately, M is used for the magenta of CMY, but the CMY color space is not so frequently used in the field of computer vision.

I will introduce how to convert an image taken under non-white light into an image under white light, namely, white balancing. Cameras have an auto white-balancing function, but it does not always work well, so please use the method shown here.

Multiple lights are allowed, but they should be the same color. The same light color means the same product and the same model; different products have different colors even if they look the same. If possible, it is better to use lights from the same production lot, with the same age since manufacture, the same usage time since purchase, and the same time since switching on.

Do not put any other objects close to the target object, in order to avoid interreflections. The interreflection from a colored object cannot be removed. The interreflection from an object with black, white, or gray albedo can be removed. More strictly, light that reflects off the colored object, then off the white (or gray/black) object, and then again off the colored object cannot be removed, but it can be ignored. Put only black diffuse objects around the target object. Black means the reflectance is low, so the interreflection is small.

Interreflection is not a big problem for human perception. It is unwanted for analysis on a PC, but humans usually do not perceive a small interreflection. So although it is important to consider interreflection when analyzing the specular-free image on a PC, it does not need to be considered if you just want to show the specular-free image to people.

Although I wrote that compensation is needed if the light is not white, I recommend that you use nearly white light. For example, if you light the object with completely red light, you obtain only the R information out of RGB.

Prepare a planar object with a completely white Lambertian surface. Sometimes one is included when you buy a camera. This is also called a white reference. You can also use the white color patch of a Macbeth chart. You can perhaps also use plaster board. Avoid using white paper; it is difficult to find completely white paper without specularity.

Illuminate the perfect white diffuse surface with the light that you use in the experiment, and observe it with the camera. The parameters of the light and the camera must be the same between the experiment and the calibration. However, you may change the shutter speed, add an ND filter, or change the iris. These settings should be set manually, and should not be changed automatically.

Suppose that the intensity of the perfect white diffuse surface is as follows.

![]()

Normalize this.

Next, observe the target object under the same light and the same camera parameters. Suppose that its intensity is as follows.

![]()

The image under white light can be calculated as follows.
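The equations for this step are missing from the text, so the following is only a sketch under stated assumptions: the white reference is normalized by dividing it by its largest channel, and the target image is then divided channel by channel by the normalized reference. The names `white_balance`, `rgb`, and `white_rgb` are hypothetical.

```python
import numpy as np


def white_balance(rgb, white_rgb):
    """Compensate a non-white light using an observed white reference.

    ASSUMPTION: normalization divides the reference by its maximum channel;
    the text does not show which normalization is used.
    `rgb` is an (..., 3) image, `white_rgb` a single (3,) reference color
    (for example, the mean over the white reference region).
    """
    white = np.asarray(white_rgb, dtype=np.float64)
    white_n = white / white.max()  # largest channel becomes 1
    return np.asarray(rgb, dtype=np.float64) / white_n
```

With this choice, a pixel that has the same color as the white reference comes out with equal R, G, and B values, and the brightest channel is left unchanged.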

The calculation is quite easy. It is easier than stereo calibration. Many algorithms have been proposed to make the image under white light without using a perfect white diffuse surface. You will find many papers if you query "color constancy" on a search website. Some have also been proposed in the Ikeuchi laboratory. See here.

The image below is a successful result.

| Input image | Specular-free image (SUCCESS) |

The image below shows the case when the light was not white.

| Input image | Specular-free image (FAIL) |

The image below shows the case when the gamma was not 1.

| Input image | Specular-free image (FAIL) |

Here, I will describe the relation between the specular-free image and the diffuse image.

As I said before, the specular-free image only changes the albedo. This means that the shading does not change. I will prove it. I will also prove that the specular component is wholly removed.

Consider a certain pixel.

The light sources are white, so the specular reflection is also white (neutral interface reflection (NIR) assumption). So the specular component is represented as follows.

![]()

where "s" is the power of the specular reflection.

Assume a Lambertian surface; then the diffuse component is represented as follows.

where cos θ represents the shading derived from Lambert's law, and (ρr, ρg, ρb) represents the diffuse albedo.

So, RGB value is represented as follows.

Now calculate the specular-free image. Intermediate calculation will be,

and finally, RGB value of specular-free image will be as follows.

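As shown above, the specular power "s" does not appear in the result, so the specular component is wholly removed, and the shading remains as a common factor, so only the albedo is changed.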
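Since the equation images are missing here, the derivation can be sketched as follows. The symbols θ (incident angle) and ρ (diffuse albedo), the transform matrix, and the formula for the specular-free image are assumptions not confirmed by this text; cos θ ≥ 0 is also assumed.

```latex
% ASSUMED model: white specular plus Lambertian diffuse.
\begin{aligned}
(r, g, b) &= (\rho_r \cos\theta + s,\ \rho_g \cos\theta + s,\ \rho_b \cos\theta + s) \\[4pt]
% ASSUMED transform: m1 = r - g/2 - b/2, m2 = (sqrt(3)/2)(g - b), m3 = (r+g+b)/3.
m_1 &= \left(\rho_r - \tfrac{1}{2}\rho_g - \tfrac{1}{2}\rho_b\right)\cos\theta \\
m_2 &= \tfrac{\sqrt{3}}{2}\left(\rho_g - \rho_b\right)\cos\theta \\
m_3 &= \tfrac{1}{3}\left(\rho_r + \rho_g + \rho_b\right)\cos\theta + s \\[4pt]
% s appears only in m3, which is replaced by a * sqrt(m1^2 + m2^2).
\hat{m}_3 &= a\sqrt{m_1^2 + m_2^2} = a\,c\,\cos\theta,
\qquad c = \sqrt{\rho_r^2 + \rho_g^2 + \rho_b^2 - \rho_r\rho_g - \rho_g\rho_b - \rho_b\rho_r} \\[4pt]
% Back to RGB with the inverse of the assumed matrix.
\hat{r} &= \tfrac{2}{3}m_1 + \hat{m}_3 = \left(\tfrac{2\rho_r - \rho_g - \rho_b}{3} + a\,c\right)\cos\theta \\
\hat{g} &= -\tfrac{1}{3}m_1 + \tfrac{1}{\sqrt{3}}m_2 + \hat{m}_3 = \left(\tfrac{-\rho_r + 2\rho_g - \rho_b}{3} + a\,c\right)\cos\theta \\
\hat{b} &= -\tfrac{1}{3}m_1 - \tfrac{1}{\sqrt{3}}m_2 + \hat{m}_3 = \left(\tfrac{-\rho_r - \rho_g + 2\rho_b}{3} + a\,c\right)\cos\theta
\end{aligned}
```

In this sketch, each output channel is a modified albedo multiplied by the same cos θ, and "s" is absent from every line after m3 is replaced.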

You can make a specular-free image with similar characteristics not only with Miyazaki's M space but also with Bajcsy's S space [1], Ohta's (I1, I2, I3) space [2], or Hurvich's opponent responses model [3]. I will not present the calculation method here; please derive it yourself. Other color spaces, such as HSV space, do not produce an image with the above characteristics.

[1] R. Bajcsy, S. W. Lee, A. Leonardis, "Detection of diffuse and specular interface reflections by color image segmentation," Int'l J. Computer Vision, Vol.17, No.3, pp.249-272, 1996.

[2] Y. Ohta, T. Kanade, T. Sakai, "Color Information for Region Segmentation," Computer Graphics and Image Processing, Vol.13, pp.222-241, 1980.

[3] L. M. Hurvich, Color Vision, Sinauer Associates, 1981.

Tan used the specular-free image in order to make a diffuse image.

But the specular-free image itself has many applications. One of them is "object recognition."

Specularity is a problem in object recognition. An object is not recognized well if there are specularities. In addition, object recognition applications often require real-time computation. Therefore, the specular-free image is useful.

Some people might think, "Wait! It is true that the specularity disappears in the specular-free image and that it is calculated in real time. But the albedo of the specular-free image is different from the albedo of the actual object! So you cannot do object recognition!" Please be calm. The answer is easy. Just process everything through the specular-free image. Use the specular-free image for creating the database (template). You can apply a learning algorithm to the specular-free image itself. You can do template matching on the specular-free image by using a specular-free template. The template will match well even if the albedo is different.

The specular-free image also has shading. If the intensity or the position of the light changes in the specular-free image you want to analyze, it will not completely coincide with the learned specular-free image (the database/template).

If you want to make the object recognition robust to shading, you can use the hue image. That is, you can use the image calculated as follows.

This means that you do template matching with an image whose pixels have 2 elements (not 3).

The degree of freedom of hue is 1 for each pixel. The degree of freedom of the specular-free image is 2 for each pixel.
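The equation for the hue image is missing from this text. One form that is consistent with "2 elements, 1 degree of freedom" is (m1, m2) divided by its own length, and the sketch below assumes exactly that. The function name `hue_image` and the parameter `eps` are hypothetical.

```python
import numpy as np


def hue_image(m, eps=1e-12):
    """Return (m1, m2) scaled to unit length, per pixel.

    ASSUMPTION: this definition is not shown in the text. Pixels with
    m1 = m2 = 0 (gray pixels, whose hue is undefined) are set to (0, 0).
    `m` is an (..., 3) array of (m1, m2, m3) values.
    """
    m12 = np.asarray(m, dtype=np.float64)[..., :2]
    norm = np.sqrt(np.sum(m12 ** 2, axis=-1, keepdims=True))
    # Divide by a clamped norm to avoid division by zero, then mask.
    return np.where(norm > eps, m12 / np.maximum(norm, eps), 0.0)
```

Scaling a pixel's intensity leaves this output unchanged, which is why it is robust to shading.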

If you want to convert the hue image back into an RGB image, you can calculate as follows. Here, I assume that the intensity has 256 levels.

Then, calculate the RGB image with the above-mentioned conversion method.

Tan proposed a method to calculate the albedo from the specular-free image, so one choice is to use that method. It needs iteration.

[1] Go to Robby T. Tan's web page.

[2] Go to CVL Publication web page.